Look, we were loyal ChatGPT users. We gave it every chance. We updated. We subscribed. We built workflows around it and trained the whole team on it. But somewhere between the forgotten context and every update somehow making it worse, we had to have The Talk. It wasn’t us. It was GPT.

Here’s the thing, though. When we started digging into why it wasn’t working, we realised the problem was bigger than ChatGPT getting worse. The problem was that we’d been choosing AI tools the wrong way entirely.

Short Memory and Shorter Attention Span

Here’s the assumption: the smartest model wins. Better model, better output, better results. Pick the one with the biggest parameter count, build your workflows around it, job done.

Sounds logical. It’s also wrong.

We ran ChatGPT as our daily driver for months. Built prompts. Trained the team. Went all in. And the memory was like talking to someone who forgets your name every five minutes. You’d build up a brief over ten messages, then it’d lose the first seven. Instructions it used to follow got ignored. Context it should have retained vanished three messages into a conversation. Infuriating.

Claude holds context better, but the real shift is custom skills: reusable behaviours you teach it for specific situations. We’ve set ours up so “write a client brief” triggers our exact template, tone, and structure. “Do a content refresh” follows our actual process, not a generic one it’s guessing at. You teach it your shorthand once, and that compounds. ChatGPT never got more useful the more we used it. Claude does.

Every GPT Update Felt Like a Downgrade

There’s a special kind of frustration that comes with watching a product you rely on get worse with every iteration. Tasks that GPT-4 handled cleanly started falling apart. Each new release felt less like an upgrade and more like someone had quietly lobotomised the thing overnight.

Meanwhile, other LLMs (Claude included) were making visible, tangible improvements with each release. The gap wasn’t closing. It was widening.

But here’s the thing nobody’s talking about enough: AI models are converging. Fast. GPT-4, Claude, Gemini. They can all write a decent email, summarise a document, and pretend to understand your brand voice. The raw intelligence gap between frontier models is, for most real business use cases, basically irrelevant.

Which means the model is no longer the product. The product is the product. We call this The Wrapper Problem. Most AI tools are the same brain wearing different outfits. The model does the thinking. The product just… wraps it. A chat window here, a dark mode there, maybe a plugin marketplace nobody uses. It’s like choosing a car based on the engine spec sheet and ignoring the fact that one of them doesn’t have a steering wheel.

The teams that figure out The Wrapper Problem early, that stop choosing AI tools based on the engine and start choosing them based on the car, are the ones that’ll actually get somewhere.

Claude Actually Does Things on Your Computer

ChatGPT is a very smart text box. You type, it talks, you copy-paste the output somewhere useful and spend an hour formatting it. That’s it. It lives in its app and it’s perfectly happy staying there.

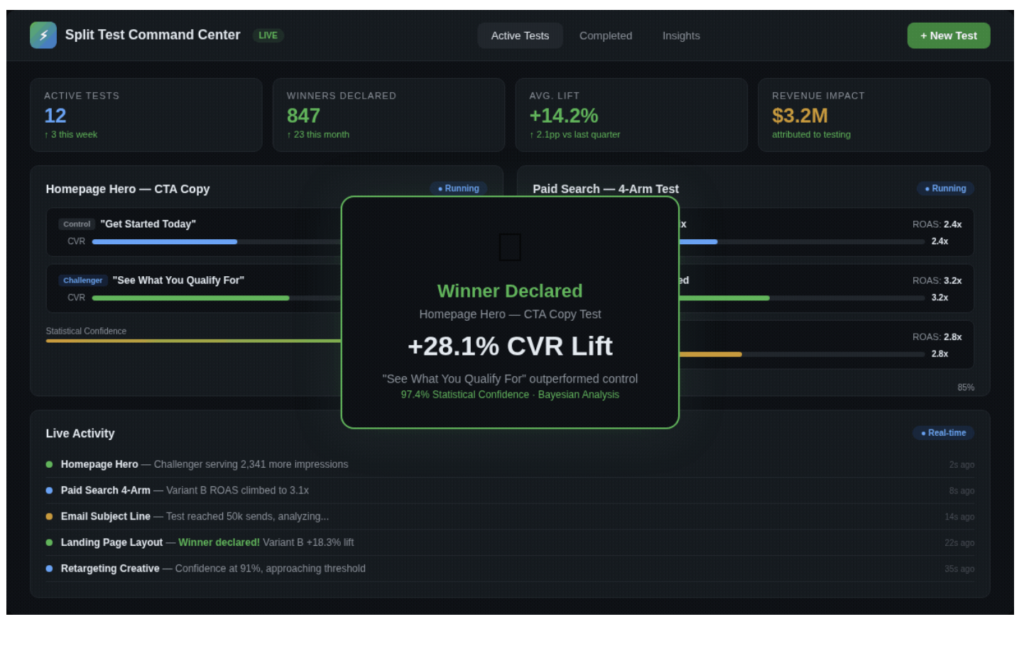

Claude, through Cowork and Claude Code, actually builds things. Presentations. Documents. Diagrams. Code it then runs. I built a fully functional testing app in about 20 minutes, with the agent taking every action on my behalf. No developer. No sprint planning. No two-week wait. Twenty minutes from idea to working product. Try doing that in ChatGPT.

For a team of marketers and strategists (not developers), this is the difference between “here’s some text you could use” and “here’s the finished thing.” One hands you a draft. The other hands you the deliverable and saves you the hour it takes to turn one into the other.

You don’t need to be technical to get Claude to do technical things for you. It meets you where you are. ChatGPT expects you to bridge the gap yourself.

How to Actually Evaluate AI Tools

If you’re making this decision right now, stop comparing models. Start comparing products. Specifically:

Test with real work, not party tricks. Everyone demos well with “write me a poem about a sunset.” Try “build a competitive analysis for our Q3 pitch in our standard format using our brand voice.” That’s where the differences get embarrassing.

Count the steps between “I need this” and “it’s done.” Can the tool create the actual deliverable, or does it create a draft you then spend an hour turning into a deliverable? Fewer steps wins. Always.

Check if it works for a team or just one person. A tool that’s brilliant for you but impossible to collaborate on is a productivity island. Great for solo work. Useless for an agency.

Watch what they ship, not what they announce. ChatGPT kept announcing things. Claude kept shipping things we actually used. There’s a difference, and it shows up about three weeks into daily use.

The Takeaway

We didn’t switch from ChatGPT to Claude because of a benchmark. We switched because one tool kept making our team slower and the other kept making us faster.

The models are converging. The product wars are just getting started. And right now, Claude is winning them, not because it’s wrapped in the fanciest interface, but because it’s built around a simple idea: AI should do the work, not just talk about it.

Pick the tool that finishes the job. Not the one that starts the conversation.